How Visualization Studios Can Start Using AI in 2026

Jan 10, 2026

In 2026, the question for visualization studios is no longer "should we use AI?" but "how do we integrate it without breaking our existing pipeline?" The experimental phase is over. We are now in a period of stabilization: AI tools are reliable enough to meet commercial deadlines and predictable enough to build into client contracts.

This guide outlines practical entry points for studios that want to adopt AI strategically. It focuses on high-impact areas where automation reduces friction, so senior artists can concentrate on art direction rather than technical troubleshooting.

1/ Concept and Pre-visualization

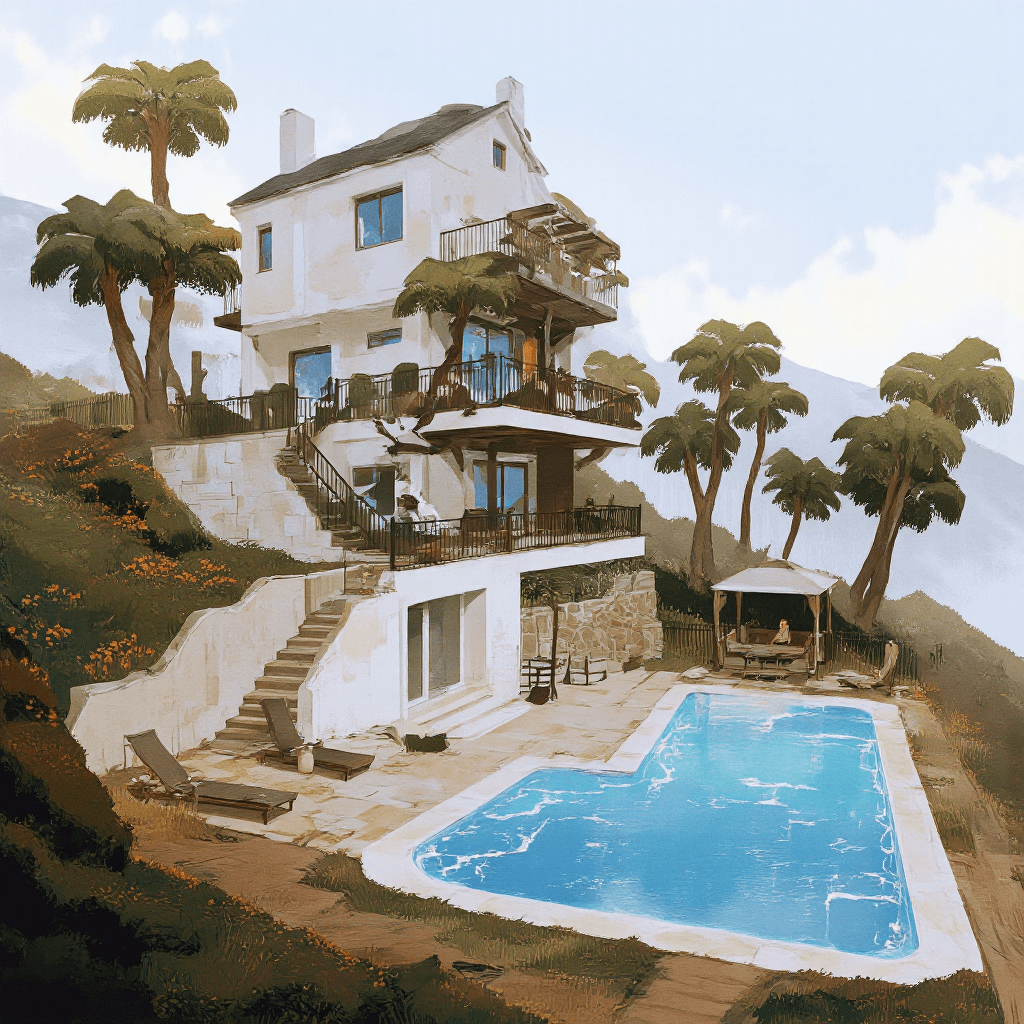

Text-to-Image Generators

These tools generate high-fidelity 2D images from written descriptions or reference images.

Why it matters: They decouple the "mood" phase from the 3D modeling phase. Studios can get client sign-off on lighting, atmosphere and composition before a single polygon is modeled.

Best for: Early mood boards and establishing a visual language with the client.

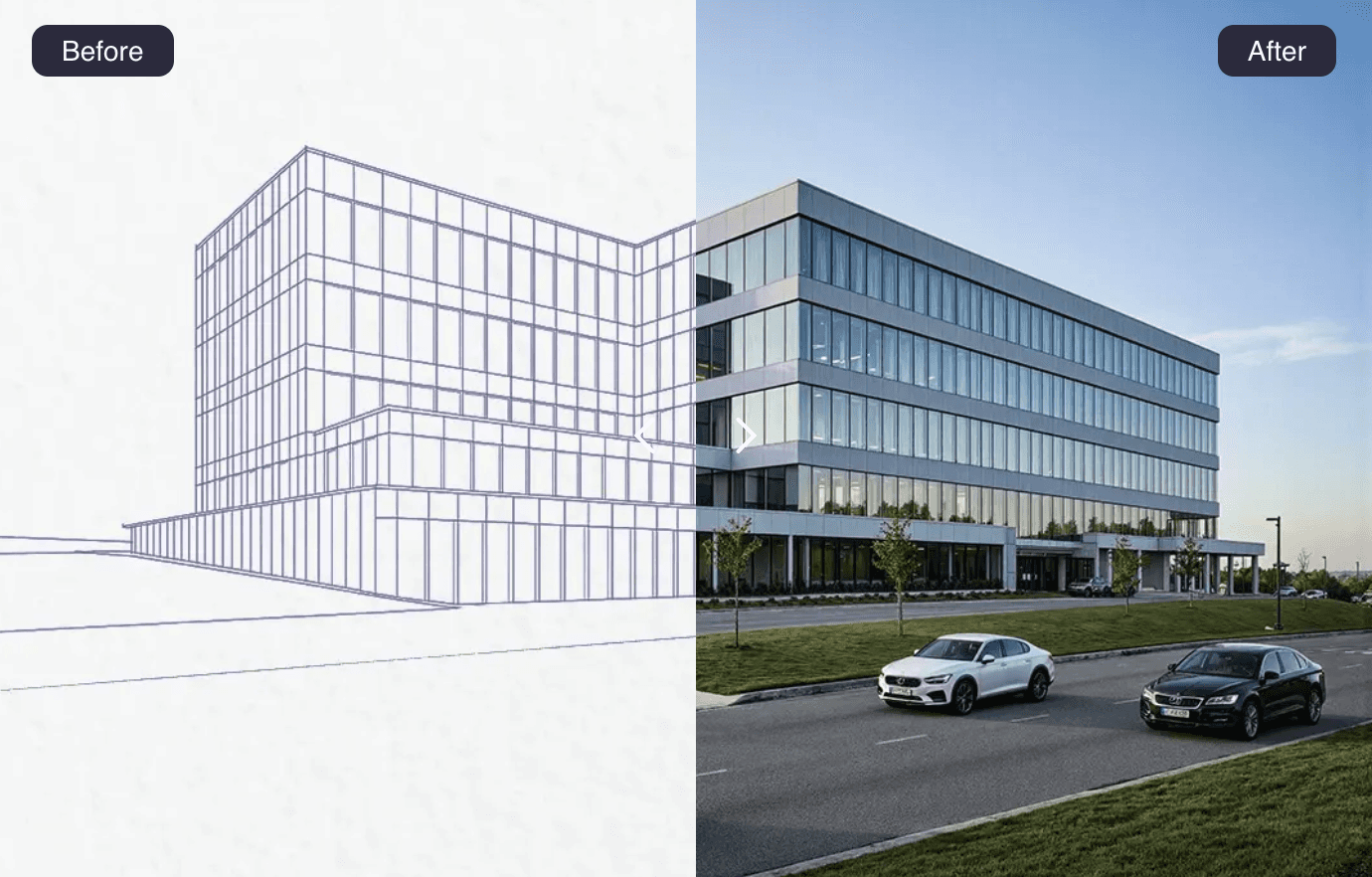

Sketch-to-Render Interpretation

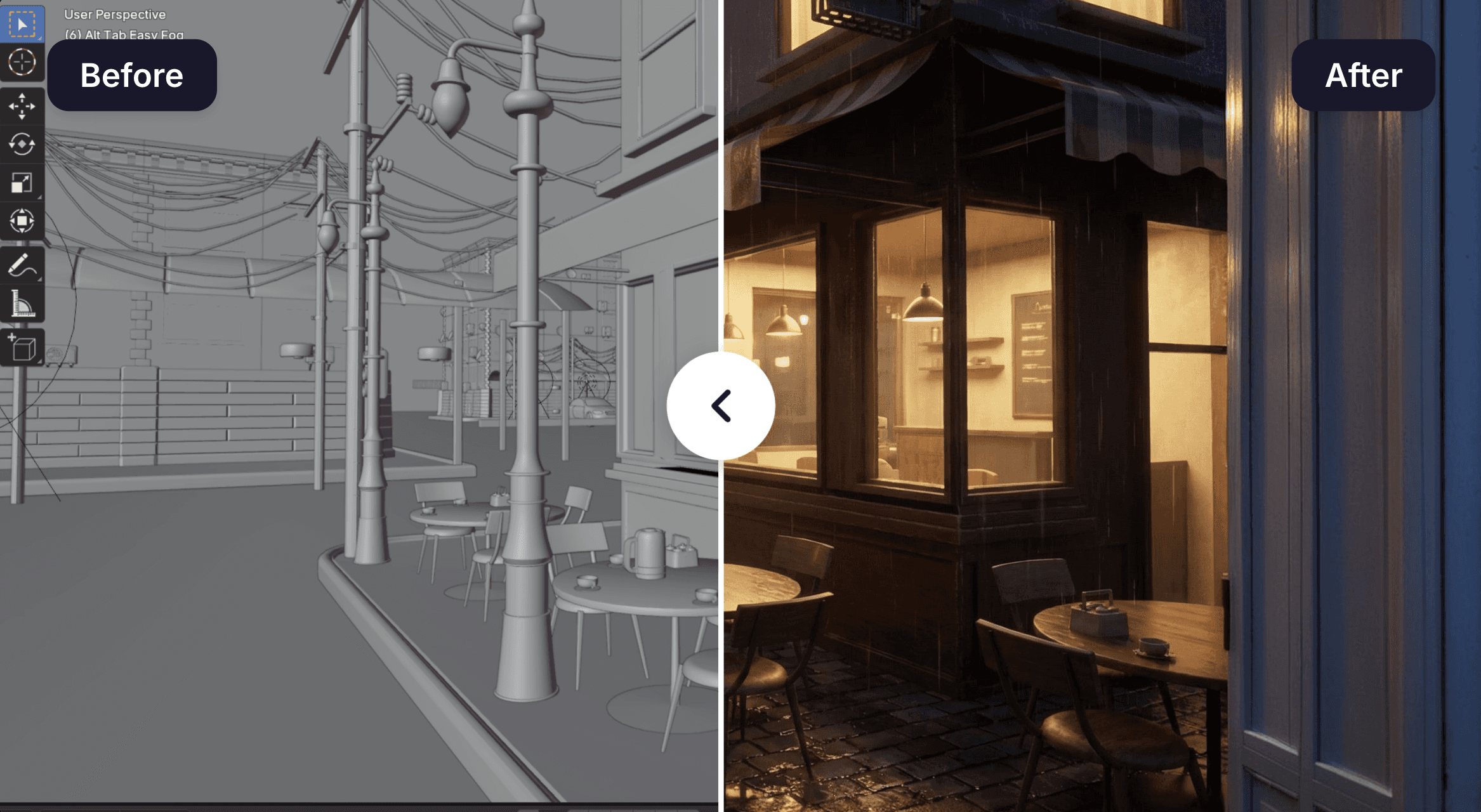

This approach takes a rough blockout or a hand sketch and projects realistic textures and lighting onto it while respecting the original geometry.

Why it matters: It instantly bridges the gap between a vague idea and a polished visual. Architects can see their massing models "dressed" in seconds, which eases blank-canvas anxiety.

Best for: Rapid iteration during design development meetings.

Style Transfer Models

These apply the visual signature of a specific artist or reference image to a new render.

Why it matters: Studios can maintain a consistent "house style" across projects and artists without manually post-processing every image.

Best for: Ensuring consistency on projects with large teams.

2/ Asset and Environment Production

AI Texture Generation

Tools that create seamless, high-resolution PBR (Physically Based Rendering) maps, such as diffuse, normal, roughness and displacement, from text prompts or sample photos.

Why it matters: Finding the perfect texture often takes longer than applying it. AI generates bespoke materials on demand, so nobody has to dig through libraries for "concrete with light moss".

Best for: Creating custom materials that match the client's specific references.
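Whatever tool produces the maps, "seamless" is a property a studio can check before a material enters its library. The sketch below is a hypothetical heuristic and does not describe how any particular generator works. It treats a grayscale texture as a 2D list of floats and compares the jump across the wrap-around seams with the typical difference between neighbouring interior pixels. The function names and the `tolerance` value are illustrative assumptions.

```python
def seam_error(tex):
    """Mean absolute jump across the wrap-around seams of a 2D grayscale texture."""
    h, w = len(tex), len(tex[0])
    # When tiled horizontally, the last column sits next to the first column.
    horiz = sum(abs(tex[y][w - 1] - tex[y][0]) for y in range(h)) / h
    # When tiled vertically, the last row sits next to the first row.
    vert = sum(abs(tex[h - 1][x] - tex[0][x]) for x in range(w)) / w
    return (horiz + vert) / 2


def interior_error(tex):
    """Mean absolute difference between horizontally and vertically adjacent pixels."""
    h, w = len(tex), len(tex[0])
    diffs = [abs(tex[y][x + 1] - tex[y][x]) for y in range(h) for x in range(w - 1)]
    diffs += [abs(tex[y + 1][x] - tex[y][x]) for y in range(h - 1) for x in range(w)]
    if not diffs:  # a 1x1 texture has no neighbours
        return 0.0
    return sum(diffs) / len(diffs)


def looks_tileable(tex, tolerance=1.5):
    """Hypothetical heuristic: the seam should be no rougher than ordinary detail."""
    # The small epsilon lets a perfectly flat texture (both errors 0) pass.
    return seam_error(tex) <= tolerance * interior_error(tex) + 1e-9


if __name__ == "__main__":
    flat = [[0.5] * 8 for _ in range(8)]
    gradient = [[x / 7 for x in range(8)] for _ in range(8)]  # hard edge when tiled
    print(looks_tileable(flat), looks_tileable(gradient))  # True False
```

Comparing against interior detail rather than a fixed threshold lets the same check work for both smooth plaster and busy stone textures.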

Text-to-3D Prop Generation

Generative models that create mesh geometry and textures for background objects from text descriptions.

Why it matters: Modelers spend a disproportionate amount of time on non-hero props such as furniture, plants and clutter. Automating this frees them to focus on the architecture itself.

Best for: Populating scenes with unique contextual assets (vases, books, street furniture).

Environment Skybox Generators

These generate 360-degree HDRI (high dynamic range imaging) maps for lighting and background reflections.

Why it matters: Standard HDRI libraries are overused. Custom lighting environments ensure the render does not look like "that popular stock sky".

Best for: Creating unique lighting scenarios for exterior shots.
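Whether generated or photographed, 360-degree HDRIs are commonly stored as equirectangular (latitude-longitude) images, where each pixel corresponds to one direction around the camera. The minimal sketch below shows that mapping. It could be used, for example, to check where the sun should sit in a custom sky. The axis convention (y up, azimuth measured from +x towards +z) is an assumption. Renderers differ, so compare against your own software before relying on it.

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a direction (y up) to (u, v) in [0, 1] on an equirectangular HDRI.

    Assumed convention: v = 0 is the zenith, v = 0.5 the horizon, v = 1 the nadir;
    u = 0.5 faces +x and u increases towards +z, wrapping around at -x.
    """
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    y = max(-1.0, min(1.0, y))  # guard asin against rounding just outside [-1, 1]
    u = 0.5 + math.atan2(z, x) / (2 * math.pi)
    v = 0.5 - math.asin(y) / math.pi
    return u, v


def sun_pixel(elevation_deg, azimuth_deg, width, height):
    """Pixel (column, row) where a sun at the given elevation/azimuth lands on the map."""
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    # Azimuth is measured in the horizontal x-z plane, from +x towards +z (an assumption).
    x = math.cos(el) * math.cos(az)
    y = math.sin(el)
    z = math.cos(el) * math.sin(az)
    u, v = direction_to_equirect_uv(x, y, z)
    # Clamp so u or v of exactly 1.0 still maps to the last pixel.
    return min(int(u * width), width - 1), min(int(v * height), height - 1)


if __name__ == "__main__":
    # A 30-degree-high sun on a 2048 x 1024 map sits a third of the way down the image.
    print(sun_pixel(30, 0, 2048, 1024))  # (1024, 341)
```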

3/ Rendering and Post-Production

AI Upscaling and Denoising

Algorithms that take a raw, low-resolution, noisy render and intelligently upscale it to 4K or 8K while removing grain.

Why it matters: This fundamentally changes the economics of rendering. Studios can render fewer samples at lower resolutions, cutting render farm costs by 50-70%, and use AI to close the quality gap.

Best for: Tight deadlines where a full-quality re-render is not possible.
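The cost argument can be made concrete with a back-of-the-envelope model. As a simplification, the sketch assumes that the sampling part of a path-traced render scales with pixel count times samples per pixel. It also assumes that a fixed share of each frame does not shrink: scene loading, acceleration-structure builds and the AI upscale/denoise pass itself. The inputs below are illustrative, not measurements. Actual savings depend on the renderer and the scene.

```python
def relative_render_cost(native_res, native_spp, reduced_res, reduced_spp, fixed_fraction=0.0):
    """Estimate the cost of a reduced render as a fraction of the native render cost.

    Assumption: the sampling part of render time scales with pixels x samples per pixel,
    while `fixed_fraction` of the native frame time does not shrink at all.
    """
    if not 0.0 <= fixed_fraction < 1.0:
        raise ValueError("fixed_fraction must be in [0, 1)")
    native_pixels = native_res[0] * native_res[1]
    reduced_pixels = reduced_res[0] * reduced_res[1]
    sampling_ratio = (reduced_pixels * reduced_spp) / (native_pixels * native_spp)
    return fixed_fraction + (1.0 - fixed_fraction) * sampling_ratio


if __name__ == "__main__":
    # Render at 1920x1080 instead of 3840x2160 with the same sample count,
    # assuming 20% of native frame time is fixed overhead (an illustrative guess).
    cost = relative_render_cost((3840, 2160), 1024, (1920, 1080), 1024, fixed_fraction=0.2)
    print(f"relative cost {cost:.2f}, saving {1 - cost:.0%}")
```

The fixed-overhead term is why halving resolution rarely delivers the ideal 75% saving. Unless it is measured, the model should be treated as an optimistic bound.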

Generative In-painting

The ability to select a specific area of a render (such as a window or a car) and replace it with generated content that matches the perspective and lighting.

Why it matters: It solves the "99% done" problem. If a client wants to change a door handle or remove a tree, you don't need to re-render the scene; you fix it in post-production.

Best for: Last-minute client requests and fixing 3D errors.
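The generative model does the hard part. The final step of any in-paint edit, though, is compositing the new pixels back into the untouched render through the selection mask. The sketch below shows only that step. It assumes grayscale images stored as 2D lists and a rectangular selection with an optional linear feather. `rect_mask` and `composite_inpaint` are hypothetical helpers, not functions from any particular tool.

```python
def rect_mask(width, height, x0, y0, x1, y1, feather=0):
    """Hypothetical selection mask: 1 inside [x0, x1) x [y0, y1), fading to 0 over `feather` px."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Pixel distance outside the rectangle (0 when inside), using the larger axis.
            dx = max(x0 - x, 0, x - (x1 - 1))
            dy = max(y0 - y, 0, y - (y1 - 1))
            d = max(dx, dy)
            if d == 0:
                row.append(1.0)
            elif feather > 0 and d <= feather:
                row.append(1.0 - d / (feather + 1))  # linear fall-off across the feather
            else:
                row.append(0.0)
        mask.append(row)
    return mask


def composite_inpaint(render, generated, mask):
    """Blend in-painted pixels into the original render: m * generated + (1 - m) * render."""
    return [
        [m * g + (1 - m) * r for r, g, m in zip(r_row, g_row, m_row)]
        for r_row, g_row, m_row in zip(render, generated, mask)
    ]


if __name__ == "__main__":
    render = [[0.0] * 4 for _ in range(4)]
    generated = [[10.0] * 4 for _ in range(4)]
    for row in composite_inpaint(render, generated, rect_mask(4, 4, 1, 1, 3, 3, feather=1)):
        print(row)
```

The feathered edge is what keeps the patch from showing a hard seam. Everything outside the mask stays byte-for-byte identical to the original render.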

Depth-Map-Based Relighting

Tools that use the depth information from a 3D render to allow lighting adjustments after rendering.

Why it matters: It adds flexibility once rendering is finished. You can change the sun's angle or intensity without reopening the 3D software.

Best for: Fine-tuning the mood during final compositing.
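A depth pass makes relighting possible because surface orientation can be estimated from how depth changes between neighbouring pixels. A new light direction can then be applied with simple Lambertian (cosine) shading. The sketch below is a deliberately crude, hypothetical version of that idea. It assumes an orthographic camera, one depth unit per pixel and a grayscale render, and it only multiplies the existing image by a new shading term. Production tools may instead rely on richer render passes (normals, albedo) or learned models.

```python
import math


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def normals_from_depth(depth):
    """Estimate per-pixel normals from a depth map with central differences.

    Camera space assumed: +x right, +y down the image, +z towards the viewer,
    orthographic projection and one depth unit per pixel. A surface at depth
    d(x, y) then has the (unnormalised) normal (dd/dx, dd/dy, 1).
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            # Neighbours are clamped at the border, so slopes there are underestimated.
            ddx = (depth[y][min(x + 1, w - 1)] - depth[y][max(x - 1, 0)]) / 2
            ddy = (depth[min(y + 1, h - 1)][x] - depth[max(y - 1, 0)][x]) / 2
            row.append(_normalize((ddx, ddy, 1.0)))
        normals.append(row)
    return normals


def relight(render, depth, light_dir, intensity=1.0, ambient=0.2):
    """Multiply a grayscale render by Lambertian shading for a new light direction.

    light_dir points from the surface towards the light, in the camera space above.
    """
    light = _normalize(light_dir)
    out = []
    for r_row, n_row in zip(render, normals_from_depth(depth)):
        out.append([
            value * (ambient + intensity * max(0.0, sum(n * l for n, l in zip(normal, light))))
            for value, normal in zip(r_row, n_row)
        ])
    return out


if __name__ == "__main__":
    depth = [[float(x) for x in range(4)] for _ in range(4)]  # surface receding to the right
    render = [[0.8] * 4 for _ in range(4)]
    print([round(v, 2) for v in relight(render, depth, light_dir=(1.0, 0.0, 0.3))[1]])
```

Here `light_dir` stands in for "sun angle" and `intensity` for "sun intensity". Neither requires touching the 3D scene, which is the flexibility the section describes.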

4/ Administration and Pipeline

Automated Asset Tagging

Systems that analyze 3D model libraries and automatically assign tags (for example "Chair", "Modern", "Wood", "High-Poly").

Why it matters: Most studios have terabytes of assets that are impossible to search. AI turns this "digital landfill" into a usable library.

Best for: Cleaning up legacy server data.
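Tagging pays off because it makes lookup possible. Once a classifier (whatever model a studio uses) has produced tags for each file, a simple inverted index turns "find me modern wooden chairs" into a set intersection. The sketch assumes the tags already exist as a `{path: [tags]}` dictionary. The file paths and tags are made-up examples.

```python
from collections import defaultdict


def build_tag_index(asset_tags):
    """Invert {asset_path: [tags]} into {tag: {asset_paths}} for fast lookup."""
    index = defaultdict(set)
    for path, tags in asset_tags.items():
        for tag in tags:
            # Normalise case so "Wood" and "wood" land in the same bucket.
            index[tag.lower()].add(path)
    return index


def search(index, *tags):
    """Return the sorted asset paths that carry every requested tag."""
    if not tags:
        return []
    # .get() avoids inserting empty entries into the defaultdict for unknown tags.
    matches = [index.get(tag.lower(), set()) for tag in tags]
    return sorted(set.intersection(*matches))


if __name__ == "__main__":
    # Hypothetical output of an auto-tagging pass over a legacy asset server.
    library = {
        "props/chair_01.fbx": ["Chair", "Modern", "Wood", "High-Poly"],
        "props/chair_02.fbx": ["Chair", "Classic", "Wood"],
        "props/table_07.fbx": ["Table", "Modern", "Wood"],
    }
    index = build_tag_index(library)
    print(search(index, "chair", "wood"))   # ['props/chair_01.fbx', 'props/chair_02.fbx']
    print(search(index, "modern", "wood"))  # ['props/chair_01.fbx', 'props/table_07.fbx']
```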

Meeting Transcription and Summarization

AI agents that record client calls and extract specific design requirements and action items.

Why it matters: It protects the studio from scope creep. A neutral, searchable record of exactly what the client asked for prevents "I thought we agreed on..." disputes.

Best for: Client briefings and feedback sessions.

5/ Client Collaboration

Real-Time Visualization Assistants

Tools that run alongside 3D software and generate real-time "preview" renders while the artist works.

Why it matters: It shortens the feedback loop. Clients can see a near-final look during a working session instead of waiting a week for a test render.

Best for: Interactive design reviews.

Image-to-Video Generation

Models that take a still render and generate a short, looping camera move or environmental animation (wind, water).

Why it matters: Video commands higher fees than stills. AI lets studios sell "living images" without the heavy workload of a full animation render.

Best for: Social media marketing material for real estate developments.

Start small, but standardize

> "We don't need AI to do everything. We just need it to fix the bottleneck."

The most successful studios in 2026 are not trying to automate their entire creative process. They identify the slowest part of their workflow, usually texturing or rendering, and apply one specific AI tool to fix it.

Start by piloting one tool on one specific task. Once it proves that it saves time without lowering quality, make it part of the standard playbook. Then move on to the next bottleneck.
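The "prove it, then standardize" rule works best when the pass/fail criteria are written down before the pilot starts. The sketch below shows one hypothetical way to encode them: it compares average hours per task and average review scores between a baseline period and the pilot. The thresholds (`min_time_saving`, `max_quality_drop`) and the scoring scale are assumptions each studio would set for itself.

```python
from statistics import mean


def should_standardize(baseline_hours, pilot_hours, baseline_scores, pilot_scores,
                       min_time_saving=0.2, max_quality_drop=0.0):
    """Decide whether a piloted AI tool graduates into the standard playbook.

    Hours are per-task durations; scores are review ratings on any fixed scale.
    Returns (decision, time_saving, quality_change).
    """
    time_saving = 1 - mean(pilot_hours) / mean(baseline_hours)
    quality_change = mean(pilot_scores) - mean(baseline_scores)
    decision = time_saving >= min_time_saving and quality_change >= -max_quality_drop
    return decision, time_saving, quality_change


if __name__ == "__main__":
    # Illustrative numbers only: texturing hours per shot before and during a pilot.
    ok, saving, delta = should_standardize([10, 12, 8], [6, 7, 5], [4, 4, 5], [5, 4, 5])
    print(ok, f"{saving:.0%}", round(delta, 2))
```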

Bonus: The Unified Workflow

Most studios struggle because they juggle six different AI subscriptions.

Rendair AI consolidates these capabilities in a single workspace. Instead of exporting to one tool for upscaling, another for in-painting and a third for video, you can manage the entire visualization cycle in one place. It is designed to fit professional workflows, offering the control studios need with the speed clients expect.
