How Visualization Studios Can Start Using AI in 2026

10/01/2026

In 2026, the question for visualization studios is no longer "should we use AI" but "how do we integrate it without breaking our existing pipeline." The experimental phase has passed. We are now in a period of stabilization, in which AI tools are reliable enough for commercial deadlines and predictable enough for client contracts.

This guide outlines practical entry points for studios that want to adopt AI strategically. It focuses on high-impact areas where automation reduces friction, so that senior artists can concentrate on art direction rather than technical troubleshooting.

1/ Concept and Previsualization

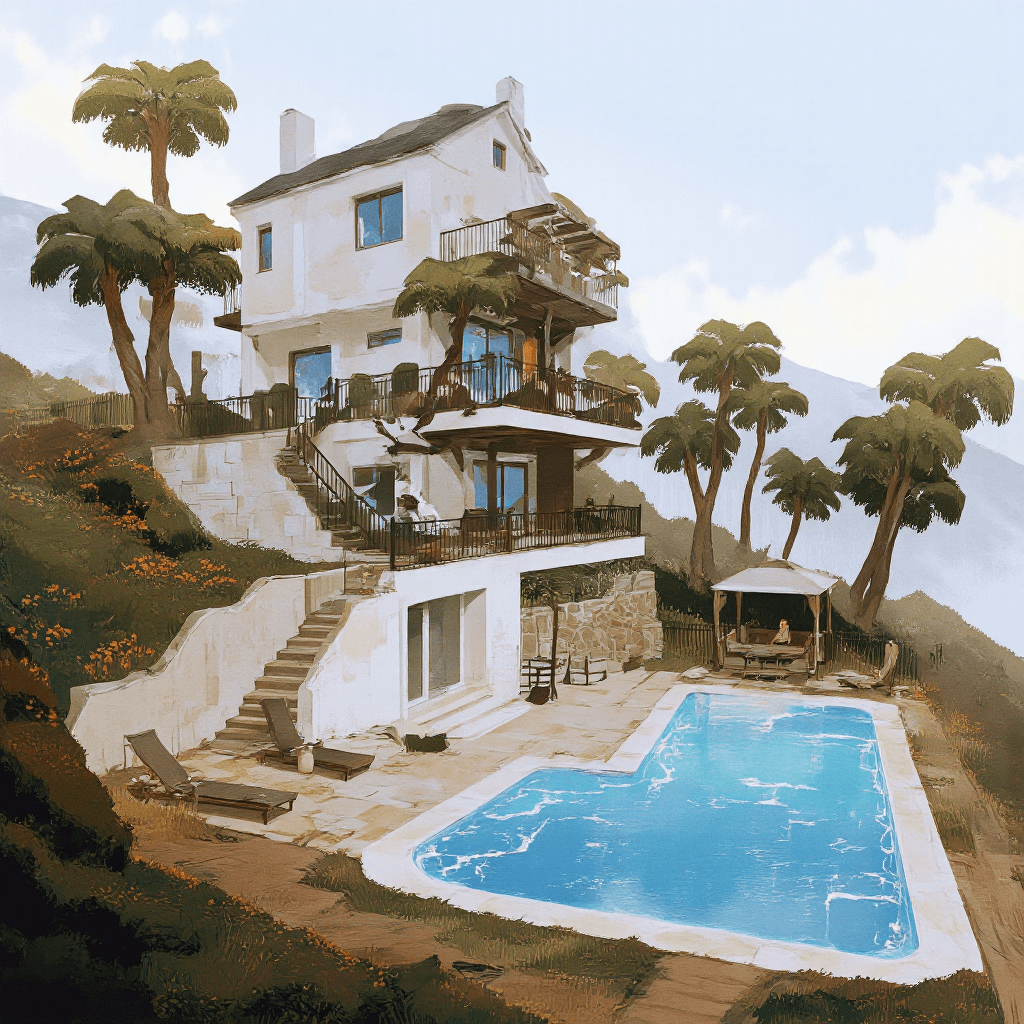

Text-to-Image Generators

These tools generate high-fidelity 2D images from written descriptions or reference images.

Why it matters: It separates the "mood" phase from the 3D modeling phase. Studios can get client approval on lighting, atmosphere, and composition before a single polygon is modeled.

Best for: Early mood boards and establishing a visual language with the client.

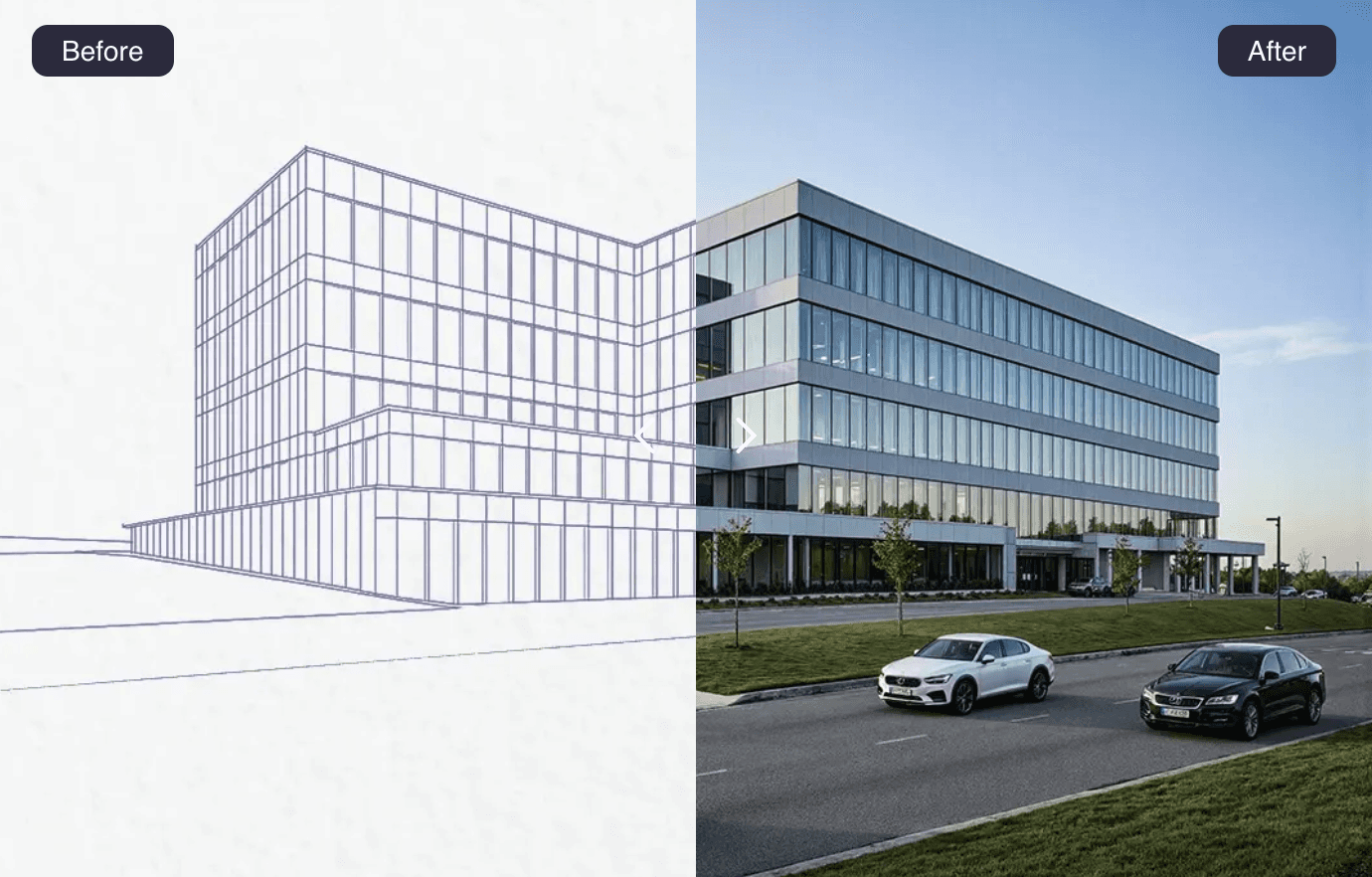

Sketch-to-Render Interpretation

This approach takes a rough blockout or hand sketch and projects realistic textures and lighting onto it while respecting the original geometry.

Why it matters: It bridges the gap between a vague idea and a polished visual. Architects can see their massing models "dressed" in seconds, which reduces blank-canvas anxiety.

Best for: Rapid iteration during design development meetings.

Style Transfer Models

These apply the visual signature of a specific artist or reference image to a new render.

Why it matters: It lets studios maintain a consistent "house style" across different projects and artists without manually post-processing every frame.

Best for: Ensuring consistency on large team projects.

2/ Asset and Environment Production

AI Texture Generation

Tools that create seamless, high-resolution PBR (physically based rendering) map sets, including diffuse, normal, roughness, and displacement maps, from text prompts or sample photos.

Why it matters: Finding the right texture often takes longer than applying it. AI generates tailor-made materials on demand, removing the need to dig through libraries for "concrete with light moss."

Best for: Creating custom materials that match specific client references.
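Whatever tool produces the maps, it helps to sanity-check a generated material before it enters the pipeline. The following is a minimal Python sketch of such a check, not the workflow of any particular product. The `TextureMap` class, the `REQUIRED_MAPS` list, and the edge-matching tolerance are all assumptions made for illustration, and maps are simplified to grayscale pixel grids.

```python
from dataclasses import dataclass

# Map types named in this section; a real pipeline may require others (assumption).
REQUIRED_MAPS = ("diffuse", "normal", "roughness", "displacement")


@dataclass
class TextureMap:
    """Hypothetical, simplified texture: rows of grayscale values in 0-255."""
    width: int
    height: int
    pixels: list


def is_seamless(tex, tolerance=8):
    """Crude tiling heuristic: opposite edges must match within `tolerance`."""
    left_right = all(abs(row[0] - row[-1]) <= tolerance for row in tex.pixels)
    top_bottom = all(
        abs(a - b) <= tolerance for a, b in zip(tex.pixels[0], tex.pixels[-1])
    )
    return left_right and top_bottom


def validate_material(maps):
    """Return a list of human-readable problems; an empty list means the set passed."""
    problems = []
    for name in REQUIRED_MAPS:
        if name not in maps:
            problems.append(f"missing map: {name}")
    # All maps in one material should share a single resolution.
    if len({(m.width, m.height) for m in maps.values()}) > 1:
        problems.append("maps have mismatched resolutions")
    for name, tex in maps.items():
        if not is_seamless(tex):
            problems.append(f"map does not tile cleanly: {name}")
    return problems
```

A check like this only catches structural problems (a missing map, mismatched sizes, an obvious seam). Whether the material actually matches the client reference still needs an artist's eye.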

Prop Generation
